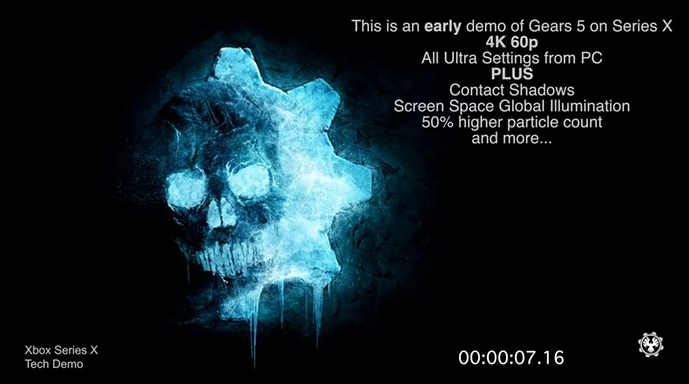

Indeed, because with XSX specs like these, this game should do 4K60 with RT easily.

I heard the game literally ran at 4K60 on One X. How does a significantly more powerful Series X cut the frame rate in half just because of ray tracing? Makes no sense to me.

At least it should be 1440p/60fps.

Are we seeing forced parity here?

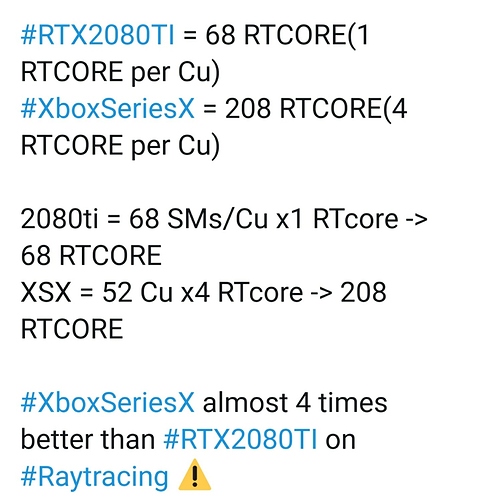

I’ve said it before: I think XSX can handle 4K60 RT, because it has separate dedicated cores (13 TF worth of hardware acceleration for next-gen RT), while PS5 is way less capable and doesn’t have that.

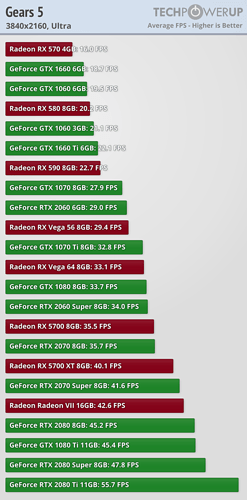

RT is expensive, and its cost depends on what is implemented and how. I find it annoying when people say stuff like ‘Series X should be able to handle this at X resolution and frame rate easily’. It’s pretty meaningless. You don’t know. Until we see benchmarked PC specs running a game at these settings, it’s just blind guesswork. And even then, the console dev environments and tools might be less mature, which means some efficiencies could be lost.

I agree, but really: One X already ran this at 4K60. Are you really telling me 6 extra TFLOPs, dedicated RT cores AND all those new next-gen features only amounted to literally halving the frame rate on Series X anyway?
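Halving the frame rate doubles the time the GPU gets per frame, and that doubled budget is what a costly per-frame effect like RT can eat into. A quick sketch of the arithmetic (the function name is just illustrative, and this ignores every other difference between One X and Series X):

```python
def frame_budget_ms(fps: float) -> float:
    """Milliseconds available to render one frame at the given frame rate."""
    return 1000.0 / fps

budget_60 = frame_budget_ms(60)  # ~16.67 ms
budget_30 = frame_budget_ms(30)  # ~33.33 ms

print(f"60fps budget: {budget_60:.2f} ms")
print(f"30fps budget: {budget_30:.2f} ms")
# Time freed up per frame by dropping from 60fps to 30fps
print(f"Extra time per frame at 30fps: {budget_30 - budget_60:.2f} ms")
```

Anything that pushes a frame past ~16.7 ms forces a drop below 60fps; a 30fps cap gives the renderer twice the time.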

Yep, I can confirm: I have it on my X1X and it runs at 4K60.

It’s expensive when you don’t have dedicated RT cores. Feel free to check. There is nothing meaningless about it.

Microsoft: Xbox Series X Performance Is 25+ TFLOPs when Ray Tracing; I/O Rate Equal to 13 Zen 2 Cores

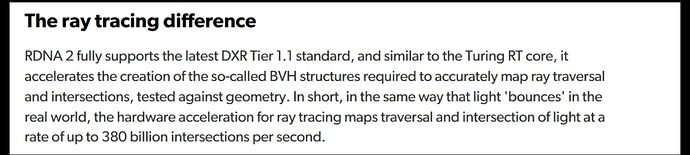

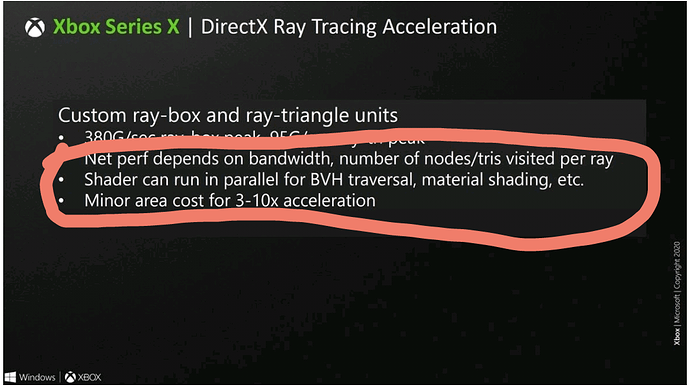

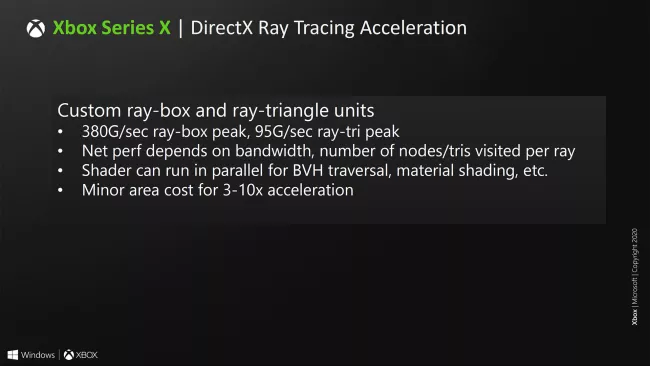

"Without hardware acceleration, this work could have been done in the shaders but would have consumed over 13 TFLOPs alone. For the Xbox Series X, this work is offloaded onto dedicated hardware and the shader can continue to run in parallel with full performance. In other words, Series X can effectively tap the equivalent of well over 25 TFLOPs of performance while ray tracing.

Xbox Series X goes even further than the PC standard in offering more power and flexibility to developers. In grand console tradition, we also support direct to the metal programming including support for offline BVH construction and optimisation. With these building blocks, we expect ray tracing to be an area of incredible visuals and great innovation by developers over the course of the console’s lifetime."

Is it safe to assume this doesn’t have smart delivery then?

Guess I’ll just buy Vergil and continue with the Xbox One version. It’s still 4K60fps; I can live with that, I guess.

Is there a link for this? I’ve been trying to figure out what resolution the 120fps mode is running at. My guess would be a dynamic 4K.

There is no such thing as forced parity through money hats. This was probably the easiest way to reach their performance targets.

Can you explain what this means? Considering both Sony and MS allow low-level access to the RT hardware in both systems, I’m struggling to think how this would work.

Please don’t spread misinformation. For one, the Series X is not almost 4x better at ray tracing than the 2080 Ti. This is a lie spread by Xbox fans on Twitter, so I wouldn’t fall for it. Native 4K60 with ray tracing won’t be a thing this gen.

Edit: apologies if this came off harsh. I understand the 25TF number came from Microsoft but it’s not an accurate description of the performance we’ll see next gen. It’s marketing speak and little more, so I wouldn’t use it as a metric of what the RT performance will truly be like.

To be fair, that last paragraph was taken directly from the Digital Foundry Xbox Series X reveal video. While I’d agree that we all need to sit back and see what the real world results look like, Microsoft themselves put out some bold claims to DF regarding their ray tracing capabilities. Hard to do an apples to apples comparison of the next gen efficiencies vs existing tech.

I also believe “ray tracing” is being used as a catch-all for features when there are entirely different levels of applying it. Full path tracing like what’s shown in the Minecraft video is vastly more expensive than ray-traced puddles. I don’t expect native 4K/60 ray-traced games either, but with upscaling and different flavours of ray tracing, the messaging on that stuff is going to be blurry.

True, you’re right.

I’d like to ask: why does this game state that PS5 will have ray tracing at launch, but that it will come to Xbox in a post-launch patch? Is this a Sony money hat or something else?

I still look at ray tracing on the Series X and muse that the only launch game said to have RT is Watch Dogs. Everything else either doesn’t have it, like Gears Tactics, or, like The Medium, isn’t dated yet. My Spidey senses say that RTX isn’t quite ready for launch… Is that why Halo Infinite was talking about a post-launch RTX patch?

How about Dirt 5? Does that support RTX?

If I’m right, I wonder if WD will quietly state at some point that RTX will come to Xbox Series S/X post-launch.

It’s not marketing; it’s just not being interpreted properly here. What that 25TF refers to is that, to get equivalent ray tracing performance without dedicated RT cores, they would need 13TF more GPU power for the ray tracing alone, so that would be the equivalent of 12 + 13 = 25TF of traditional GPU compute. But all that 13TF worth of extra work is offloaded onto the dedicated RT cores, which do the ray tracing work while the base 12TF handles rasterization as usual.
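The “25 TFLOPs” figure is additive: shader compute plus the compute the RT hardware’s work would have cost if it ran on shaders instead. A minimal sketch using the thread’s rounded 12 TF and Microsoft’s “over 13 TFLOPs” estimate (the constant and function names are illustrative, not from any official source):

```python
# Rounded figures from the thread / Microsoft's statement (assumptions, not measurements)
SHADER_TFLOPS = 12.0      # Series X shader compute, rounded
RT_OFFLOAD_TFLOPS = 13.0  # "over 13 TFLOPs" of RT work handled by dedicated hardware

def equivalent_tflops(shader_tf: float, offloaded_tf: float) -> float:
    """Compute a shader-only GPU would need to do the same raster + RT work."""
    return shader_tf + offloaded_tf

print(equivalent_tflops(SHADER_TFLOPS, RT_OFFLOAD_TFLOPS))  # 25.0
```

It’s an equivalence, not extra shader throughput: the programmable part of the GPU is still the ~12 TF in this sum.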

30fps on DMC V is heresy. Even on the original Xbox One the game is 60fps.

There should be a limit to how far developers go just for “reflections on water puddles”.

Thank you!

But Capcom is giving the user options though. If someone would prefer to play DMC V at 30fps for ray tracing and 4K, then that’s their decision.

Unfortunately, that’s where it is marketing speak: you’re not getting the full 12TF of rasterization performance when using RT, because aspects like BVH traversal are handled on the shaders. Basically, part of the GPU’s shader performance has to handle parts of the RT process instead of normal rasterization.

If what everyone here is saying were true, we shouldn’t see a GPU-related performance drop when enabling RT, but we will see one. This performance hit also won’t be universal. Even if we compare something like RT reflections between two games, the performance will vary based on multiple factors, including the number of objects included in the BVH. Like everything else in game development, there is no one-size-fits-all approach, and things are a bit more complicated than they appear on the surface. This is why I wish Goossen hadn’t put that comment out there, since it’s being interpreted in ways he may not have anticipated.
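Since traversal runs on the shaders, its cost grows with scene complexity, which is one reason the RT hit differs between games. A deliberately crude model, assuming a perfectly balanced binary BVH where each ray visits exactly one node per level (real traversal is messier and usually visits more nodes; the function names and the one-ray-per-pixel count are hypothetical):

```python
import math

def traversal_depth(num_objects: int) -> int:
    """Levels in an idealized balanced binary BVH with num_objects leaves."""
    return max(1, math.ceil(math.log2(num_objects)))

def node_visits_per_frame(rays: int, num_objects: int) -> int:
    """Toy estimate: each ray visits one node per level (real traversal visits more)."""
    return rays * traversal_depth(num_objects)

rays = 1920 * 1080  # hypothetical: one reflection ray per pixel at 1080p
small_scene = node_visits_per_frame(rays, 1_000)
big_scene = node_visits_per_frame(rays, 1_000_000)
print(traversal_depth(1_000), traversal_depth(1_000_000))  # 10 20
print(big_scene / small_scene)  # 2.0
```

Going from a thousand to a million objects only doubles the depth in this idealized case, but whatever the real number is, that work comes out of shader time that would otherwise go to rasterization.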

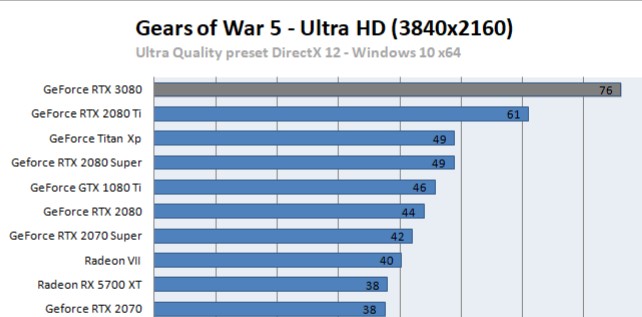

The “4x the 2080 Ti” claim is a misrepresentation after all. If anything, XSX can only come close to it. Here’s how Minecraft performs on a 2080 Ti:

- native 1080p at 60fps

And we all know the XSX Minecraft demo was only running at native 1080p with frame rates between 30 and 60. Those could have been the low 40s or the high 50s; we may never know. Also, it was an early build without much manpower put into development.
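For scale against the 4K60 target the thread started with, native 4K is four times the pixels of native 1080p. A quick sketch of the pixel math (it assumes per-pixel work such as primary rays scales with pixel count, which is only roughly true, and ignores everything else in the frame):

```python
RESOLUTIONS = {
    "1080p": (1920, 1080),
    "1440p": (2560, 1440),
    "4K": (3840, 2160),
}

def pixel_count(name: str) -> int:
    """Pixels per frame at the named resolution."""
    width, height = RESOLUTIONS[name]
    return width * height

base = pixel_count("1080p")
for name in RESOLUTIONS:
    # Pixels per frame and how many times 1080p that is
    print(f"{name}: {pixel_count(name):,} px ({pixel_count(name) / base:.2f}x 1080p)")
```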

But there are some things worth noting about the XSX RT implementation.

XSX does support RT cores and shader cores working in parallel.

As per Hot Chips:

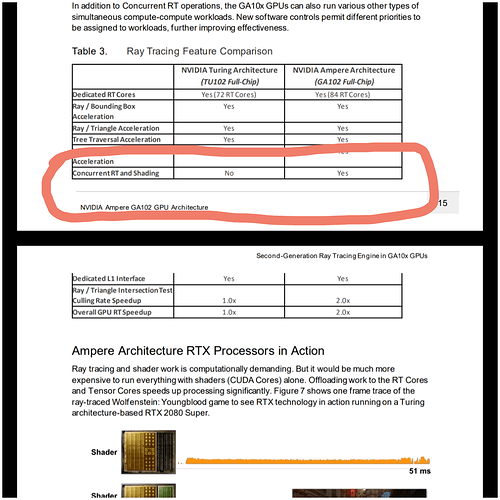

But Turing can’t do concurrent RT and shader calculations.

As per the Nvidia white paper:

XSX may come out on top of, or maybe equal to, 2080 Ti RT performance after all 🤔
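Why concurrency matters can be shown with a worst-case/best-case toy model: if RT work and shader work can’t overlap, their times add; with perfect overlap, the frame only takes as long as the longer of the two. Real hardware lands somewhere in between, and the millisecond figures below are made up purely for illustration:

```python
def serial_frame_ms(shader_ms: float, rt_ms: float) -> float:
    """RT work and shader work take turns: their times add up."""
    return shader_ms + rt_ms

def overlapped_frame_ms(shader_ms: float, rt_ms: float) -> float:
    """Ideal overlap: the frame takes as long as the slower of the two."""
    return max(shader_ms, rt_ms)

# Hypothetical per-frame costs, purely for illustration
shader_ms, rt_ms = 12.0, 6.0
print(serial_frame_ms(shader_ms, rt_ms))      # 18.0
print(overlapped_frame_ms(shader_ms, rt_ms))  # 12.0
```

This only bounds the possible gain; it says nothing about how XSX or a 2080 Ti actually schedule work.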