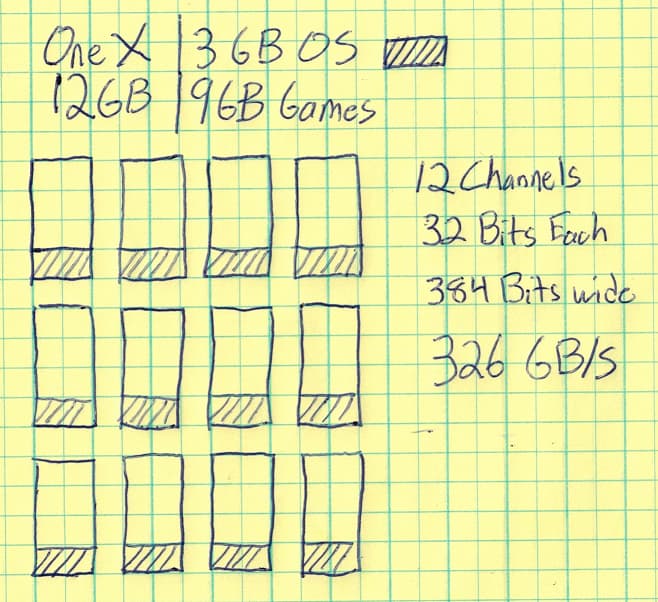

About the One X memory bus: it has a typical memory bus layout, where every segment is addressable and runs at full speed. The bus is 384 bits wide. The console has 12 GB of memory in total, of which 3 GB is used by the OS and 9 GB is accessible to games.
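The numbers above can be checked with a little arithmetic. This is only a rough sketch: the variable names are made up for illustration, the 32-bit channel width is the simplified view used in this post, and the per-channel capacity assumes the 12 GB is spread evenly across the channels.

```python
# Figures taken from the post.
BUS_WIDTH_BITS = 384       # total memory bus width
CHANNEL_WIDTH_BITS = 32    # width of one channel (simplified view)
TOTAL_MEMORY_GB = 12       # total memory in the console
OS_RESERVED_GB = 3         # memory used by the OS

# Number of 32-bit channels that make up the 384-bit bus.
channels = BUS_WIDTH_BITS // CHANNEL_WIDTH_BITS

# Memory left over for games once the OS share is taken out.
game_memory_gb = TOTAL_MEMORY_GB - OS_RESERVED_GB

# Assumption: capacity is split evenly across the channels.
memory_per_channel_gb = TOTAL_MEMORY_GB / channels

# Bytes moved across the full bus in a single transfer.
bytes_per_transfer = BUS_WIDTH_BITS // 8

print(f"channels: {channels}")
print(f"game memory: {game_memory_gb} GB")
print(f"memory per channel: {memory_per_channel_gb} GB")
print(f"bytes per full-width transfer: {bytes_per_transfer}")
```

Because every channel sits behind the same full-width bus, the 9 GB available to games is not confined to a slower subset of channels.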

It can be thought of as having 12 channels of 32 bits each (12 × 32 = 384). Here is a rough picture to help you visualize how the full speed is available to games.