I know this article but even here in the quote from Eurogamer article, you can clearly read it is speculation on their part “It is reasonable to assume that (…) somehow” It is not a confirmation from their part at all. The terms they use are highly speculative, reasonable assumptions not confirmations.

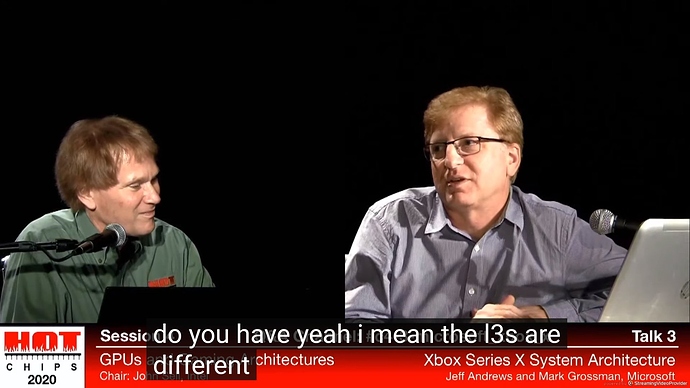

And it shows they didn’t probably see the video conference but only the powerpoint cause it was not available at the moment, otherwise they would have mentionned the L3 question thing with Jeffrey Andrews cause it logically raises eyebrows.

Also you heard it clearly in the video I posted with the exact timestamps at 33:30 when Jeffrey Andrews CPU architect spoke about AMD NDA about the cache, I’m not fabricating it.

Here the timing and chronology is really important to have a better understanding.

The HotChips video conference is from a date way anterior to the RDNA2 conference. That is why they had to wait for AMD to reveal RDNA2. The RDNA2 officialisation was on the 28th of october. Maybe they now can talk about it. Maybe…

MS clearly said all the RDNA2 features showed (including Infinity Cache feature) by AMD were hardware supported by Xbox Series X/S

Infinity Cache is an RDNA2 feature, Xbox Series X/S are the ONLY next gen consoles with FULL RDNA2. Meaning XSX has Infinity Cache…It is clear. I already brought the official source above posts earlier. “Xbox Series X|S are the only next-generation consoles with full hardware support for all the RDNA 2 capabilities AMD showcased today.”

AMD showcased Infinity Cache this day as a RDNA2 feature…So voilà. ![]()

Btw for the 128MB where did AMD said it was mandatory to have 128MB? Which is why it’s the same size across all RX 6000 GPU revealed so far. But who says the size needed to be the same?

L3s of the SX CPU is 4MB per CCX compared to 16MB on desktop Zen 2. Right? Meaning that’s 1/4th the size.

So why couldn’t the XSX have an Infinity Cache 1/4th the size of what AMD is putting in desktop RX 6000 GPUs also? So 32MB infinity cache.

Nobody answered my questions before in my precedent post about MS using the same strategy ![]() why would they change strategy then now? Seems to have the same function as below:

why would they change strategy then now? Seems to have the same function as below:

“On-chip caches for GPU usage are not a new idea, especially for AMD. The company included a 32MB eSRAM cache for the Xbox One (and Xbox One S) SoC, and even before that the Xbox 360 had an on-package eDRAM as well. But this is the first time we’ve seen a large cache on a PC GPU.”

The infinity cache seems like an updated version of what MS did previously on X360 and Xbox One with eDram & eSram. That’s why it might be really useful for full efficiency on the XSX like I quoted above “allowing the GPU to more quickly fetch data rather than having to wait around for it to come in from VRAM” for example.

The infinity cache plays the role as a massive bandwith amplificator.

Microsoft consoles have provided the biggest hardware and software gaming technologies to the industry, which Microsoft has always shared with and used to advance Windows (DirectX) and PC hardware needs. They continue doing it with bringing new tech to PC world so I’m not surprised.

Maybe someone can ask Jason Ronald or Jeffrey Andrews or Lisa Su about the Infinity Cache and its relation with XSX.