If I get you right you are saying that as the OS and game allocations are spread across all 12 channels, theoretically the game can access the entire bandwidth? But the OS is always going to be taking from that bandwidth. It’s the same bus. What am I missing?

It’s beyond theoretical. It’s practical. It’s what happens.

It’s what would happen if the OS was turned off, right? But the OS is always using that same bus, taking potential bandwidth away from the game?

There are two different things.

Another running app or operating system process will always take resources away (memory, bandwidth, processing power), yes. But why is your operating system hogging so much bandwidth? What is this thing doing?

The other point is the different memory bus layout between Xbox One X and Xbox Series. This has nothing to do with OS processes, it’s entirely on the hardware level. If a game accesses memory which is on the slower chips, it gets less bandwidth.

It uses the same bus, but the OS is not always running. CPU/GPU reservations are beyond the scope of what needs to be discussed for memory bandwidth.

For inclusion here are the Xbox One generation resource reservations (taken from my post at B3D):

Yeah, the important thing to stress wrt VRR and how it works with different framerate ranges is frametime rather than framerate. Your eyes/brain don’t care about framerate, they care about frametime. A 1 fps drop in a 30 fps game is proportionally the same as a 2 fps drop in a 60 fps game, but it adds about twice as many milliseconds to each frame, so you will be much more likely to notice such drops in lower framerate games.
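To put rough numbers on the frametime point, here is a small Python sketch. The function names are made up for illustration; the only thing it relies on is that frametime in milliseconds is 1000 divided by the frame rate.

```python
def frametime_ms(fps: float) -> float:
    """Time spent on one frame, in milliseconds, at a given frame rate."""
    return 1000.0 / fps


def drop_cost_ms(base_fps: float, drop_fps: float) -> float:
    """Extra milliseconds per frame when the frame rate falls by drop_fps."""
    return frametime_ms(base_fps - drop_fps) - frametime_ms(base_fps)


if __name__ == "__main__":
    # (base frame rate, size of the drop) pairs to compare.
    for base, drop in [(30, 1), (60, 2), (60, 1)]:
        cost = drop_cost_ms(base, drop)
        print(f"{base} -> {base - drop} fps: +{cost:.2f} ms per frame")
```

Going from 30 to 29 fps adds about 1.15 ms per frame, while going from 60 to 58 fps adds about 0.57 ms. The two drops are the same percentage, but the one at 30 fps costs twice the frametime.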

My personal hope is that once the DLSS equivalents get used for Xbox Series X and PlayStation 5, every game targets 60FPS as standard, since developers should have more resources available to keep the frame rate at a steady, solid and consistent 60FPS for the entire game.

Kinda funny that DF will have to analyze Tomb Raider soon…again.

PS5 has no ML acceleration to do ML super res. You won’t get equivalents of DLSS there. Instead, the devs will likely focus on offering fidelity and perf modes tho imho.

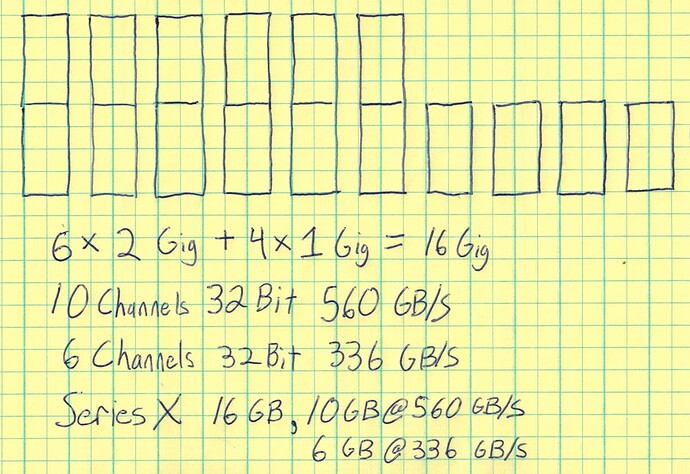

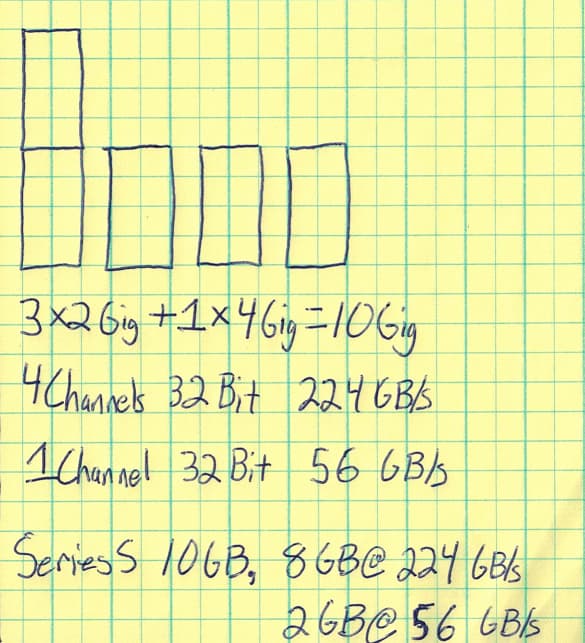

To round out the memory bus and bandwidth discussion, here are rough pictures to help visualize the different Series X and Series S layouts. (Apologies for not wanting to figure out how to proportionally shade 41.66% of the 6 blocks for OS memory use in the Series X on a Friday.)

Xbox Series X has a 320-bit memory bus and 16 GB of memory in total. It has 10 memory chips, 6 of them 2 GB and 4 of them 1 GB. This is how it gets its unique memory size and bandwidth segments. It can be thought of as having 10 channels of 32 bits each for 10 GB at 560 GB/s, and 6 channels of 32 bits each for the remaining 6 GB at 336 GB/s.

On the Series X, the set of 6 memory channels of 6 GB at 336 GB/s is used for Games (3.5 GB) and OS (2.5 GB).

Xbox Series S has a 128-bit memory bus and 10 GB of memory in total. It has 5 memory chips, 3 of them 2 GB and 2 of them clamshelled to act like a single 4 GB chip. This is how it gets its unique memory size and bandwidth segments. It can be thought of as having 4 channels of 32 bits each for 8 GB at 224 GB/s, and 1 channel of 32 bits for the remaining 2 GB at 56 GB/s.

On the Series S, the single memory channel of 2GB at 56 GB/s is used for the OS (2 GB).
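The bandwidth figures above all fall out of the channel counts. Here is a quick Python sketch of the arithmetic. It assumes 14 Gbit/s per pin GDDR6, which gives the 56 GB/s per 32-bit channel quoted for the Series S; the names and the table layout are just for illustration.

```python
PIN_RATE_GBPS = 14       # assumed GDDR6 data rate per pin, in Gbit/s
CHANNEL_WIDTH_BITS = 32  # each channel is 32 bits wide, as described above


def channel_bandwidth_gbs() -> float:
    """Bandwidth of one 32-bit channel in GB/s (bits / 8 = bytes)."""
    return PIN_RATE_GBPS * CHANNEL_WIDTH_BITS / 8


def region_bandwidth_gbs(channels: int) -> float:
    """Peak bandwidth of a memory region striped across `channels` channels."""
    return channels * channel_bandwidth_gbs()


# (region size in GB, number of channels the region is striped across)
LAYOUTS = {
    "Series X": [(10, 10), (6, 6)],
    "Series S": [(8, 4), (2, 1)],
}

if __name__ == "__main__":
    for console, regions in LAYOUTS.items():
        for size_gb, channels in regions:
            bw = region_bandwidth_gbs(channels)
            print(f"{console}: {size_gb} GB over {channels} channel(s) = {bw:.0f} GB/s")
```

The slower region on each console is slower only because it is striped across fewer chips, not because those chips run at a different speed.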

Doesn’t PS5 support that AMD Super Resolution or whatever it’s called? Series X is my primary gaming console so for multi-platform games, it won’t matter but I was hoping Sony would have their studios use that to get every game at 60FPS with HDR and Ray Tracing at high settings instead of having a visual mode and a performance mode.

AMD super resolution is not a DLSS alternative.

What’s AMD Super Resolution then?

Previous-gen consoles also support AMD Super Resolution. It doesn’t use machine learning like DLSS. A closer comparison is the UE temporal upscaling.

People don’t like comparing it because it isn’t ML based and does not produce as good a result. SR is not hugely different from the upscaling already existing in some games.

AMD FSR is an upscaler or filter, like Lanczos.

Actually it’s a very misleading term. It’s not technically super resolution if it’s not creating/generating/adding “new information” which is only possible using machine learning/deep learning techniques.

If you upscale an image in Photoshop with a good quality filter and add a slight sharpening afterwards, you are pretty close to the result from AMD’s super resolution.

This is completely different from modern temporal upscaling algorithms.
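For anyone wondering what "a good quality filter plus a slight sharpening" looks like in practice, here is a toy 1D version in plain Python. To be clear, this is not AMD's actual algorithm, just a sketch of a Lanczos-style resample followed by a light sharpen on a single row of pixel values. All function names and the `amount` parameter are made up for the example.

```python
import math


def lanczos_kernel(x: float, a: int = 2) -> float:
    """Lanczos window: sinc(x) * sinc(x / a) for |x| < a, else 0."""
    if x == 0.0:
        return 1.0
    if abs(x) >= a:
        return 0.0
    px = math.pi * x
    return a * math.sin(px) * math.sin(px / a) / (px * px)


def upscale_1d(samples, factor, a=2):
    """Resample a row of values to len(samples) * factor points."""
    n = len(samples)
    out = []
    for i in range(n * factor):
        # Position of output sample i in source coordinates (centres aligned).
        src = (i + 0.5) / factor - 0.5
        first_tap = math.floor(src) - a + 1
        total = 0.0
        weight_sum = 0.0
        for j in range(first_tap, first_tap + 2 * a):
            w = lanczos_kernel(src - j, a)
            # Clamp the index so the border repeats the nearest sample.
            total += w * samples[min(max(j, 0), n - 1)]
            weight_sum += w
        # Normalise so flat areas stay flat.
        out.append(total / weight_sum)
    return out


def sharpen_1d(samples, amount=0.25):
    """Light unsharp mask: push each value away from a local blur."""
    n = len(samples)
    out = []
    for i, s in enumerate(samples):
        left = samples[max(i - 1, 0)]
        right = samples[min(i + 1, n - 1)]
        blur = (left + 2 * s + right) / 4
        out.append(s + amount * (s - blur))
    return out


if __name__ == "__main__":
    row = [0.0, 0.0, 1.0, 1.0]  # a hard edge
    print(sharpen_1d(upscale_1d(row, 2)))
```

Note that everything here works from the pixels of one frame. Nothing is carried over from previous frames, which is the big difference from temporal upscalers.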

Correct me if I’m wrong, but SR doesn’t sound like it’s going to do much then? Is it just another form of upscaling? Because that’s what it sounds like to me.

So basically, it is another form/type of upscaler.

Why exactly is SR being made a big deal then? Or is it a big deal and I’m just not understanding?