PS5 seems to have a performance advantage in 120fps mode and SX has a very minor 1fps advantage in RT mode.

I'm starting to think Cerny's secret sauce is the real deal.

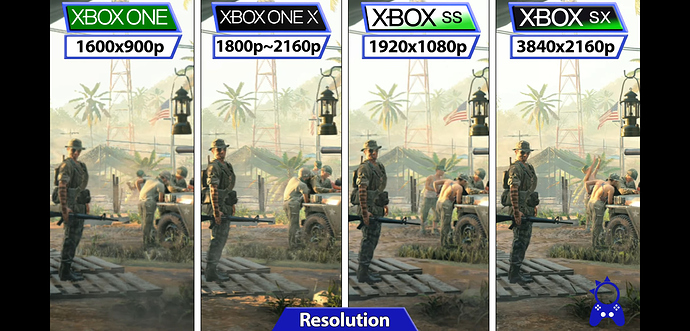

It’s nothing to do with secret sauce. Firstly, we need to see the resolutions, because it may be that the Series X is somehow targeting a slightly too high dynamic res. This happened on the One X constantly, and the Pro would have a performance advantage.

Secondly, the Xbox dev tools are behind, and as of a month ago the gap you’d have seen between versions would have been far larger. Xbox have played a long game; Sony have banked on being there at launch. But, for example, when ML starts to be critical in the AMD version of DLSS, Xbox will have an advantage due to their patience.

And frankly given the tiny differences in framerate right now I’d rather that.

Damn… I was thinking that earlier on. I guess I’ve been influenced by other people online. Yeah, that’s why I didn’t make a thread on the subject, for the reasons you said (resolution + effect differences).

I think it’s most likely DirectX performance issues and lack of time with dev kits. Like I say, we were not very many days away from Watch Dogs not launching with ray tracing on the Xbox side and ACV potentially having to be locked at 30 since it wasn’t hitting 60. The API and dev kits came in hot.

Be good if some of the developers on here could verify this, but I guess Digital Foundry kind of have anyway.

Watch Dogs? You mean DMC5.

MS really should have tempered their “Most Powerful Console” marketing.

I will blame DX12 performance as well.

It has some issues which make it underperform compared to DX11.

Also, I am shocked to learn that APIs and software could have this much effect on performance.

Basically eating away 10 to 15% of performance.

MS waited for full RDNA 2, and dev tools will catch up. I wouldn’t worry about it. Just enjoy the games, and we’re in for a great generation.

To be fair, the majority of their marketing has been “the most powerful console we have ever made”. However, I’m still convinced the Series X is the more powerful hardware. Ever since the specs of both consoles were known I expected a 100p or 10% fps advantage. An unoptimized SDK could absolutely take away 20% performance.

Do you have a source for this?

I know dev kit issues can cause a 20% performance decrease because the OG Xbox One improved about that much later in the gen.

Ok, so you experienced this developing for the Series X or for the One, correct?

No, and I never implied that I did.

Oh, you just dropped that 20% figure like it was something you experienced personally. Is that documented somewhere then?

No, I didn’t. What are you talking about?

Sorry, I just assumed since you didn’t cite a source it was based on personal experience.

Why don’t you just ask why I think what I think instead of insinuating all this stuff?

I mean, it’s obvious from the games that X1 performance improved since launch, e.g. Battlefield 4 was 720p at launch and Battlefield 1 was 900p on average; same with the Call of Duty titles.
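
For what it's worth, the 720p to 900p jump can be put into rough numbers. This is only a sketch: it assumes 720p means 1280x720 and 900p means 1600x900 (the posts only give the vertical figure), and the names `RESOLUTIONS`, `pixel_count` and `relative_increase` are made up for illustration.

```python
# Assumed 16:9 frame sizes; the thread only quotes the vertical resolution.
RESOLUTIONS = {
    "720p": (1280, 720),
    "900p": (1600, 900),
}


def pixel_count(label: str) -> int:
    """Return the number of pixels in one frame at the given resolution."""
    width, height = RESOLUTIONS[label]
    return width * height


def relative_increase(old: str, new: str) -> float:
    """Return the fractional increase in pixels per frame from old to new."""
    return pixel_count(new) / pixel_count(old) - 1.0


if __name__ == "__main__":
    gain = relative_increase("720p", "900p")
    print(f"720p -> 900p: {gain:.1%} more pixels per frame")
```

Pixels per frame is not the same thing as performance, though. These are two different games on different engine versions, so the figure says nothing on its own about how much of the change came from the dev kit.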

I asked if you had a source and you replied with “I know dev kit issues can cause 20% performance decrease because the OG Xbox One improved about that much later in the gen.” Was just curious how you know that figure.

Just look at the games, and how the visual metrics improve…