One thing I’ll add is that the Xbox One S had a GPU clock of 914 MHz, about 14% higher than the PS4’s 800 MHz, yet it didn’t really close the gap.

I explicitly posited “Given identical game states and a fixed player input.”

Anyway, I think you’re getting hung up on somehow predicting the next instruction. That’s not necessary. All that’s necessary is that executing the same instructions results in the same clock changes every time they are executed.
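To make the determinism argument concrete, here is a toy sketch. It assumes the clock is picked from the workload’s activity level against a fixed power budget rather than from temperature readings. The budget, the power coefficients and the cubic scaling are hypothetical placeholders, not Sony’s figures.

```python
# Toy model of activity-based clocking: the clock is a pure function of the
# workload's activity level and never reads a temperature sensor.
# All constants are hypothetical, chosen only for illustration.
MAX_CLOCK_MHZ = 2230       # cap used for illustration (the PS5's advertised GPU max)
CLOCK_STEP_MHZ = 10        # hypothetical granularity of clock adjustments
POWER_BUDGET_W = 200.0     # hypothetical fixed power budget
FULL_LOAD_POWER_W = 250.0  # hypothetical power at max clock and 100% activity


def estimated_power(activity: float, clock_mhz: int) -> float:
    """Estimate power from workload activity (0.0 to 1.0) and clock.

    Scales with clock cubed as a rough stand-in for frequency times voltage
    squared, assuming voltage rises roughly in step with frequency.
    """
    return FULL_LOAD_POWER_W * activity * (clock_mhz / MAX_CLOCK_MHZ) ** 3


def pick_clock(activity: float) -> int:
    """Highest clock (in CLOCK_STEP_MHZ steps) whose estimated power fits the budget."""
    clock = MAX_CLOCK_MHZ
    while clock > CLOCK_STEP_MHZ and estimated_power(activity, clock) > POWER_BUDGET_W:
        clock -= CLOCK_STEP_MHZ
    return clock


# Same instructions -> same activity -> same clock, on every unit and every run.
light, heavy = 0.6, 1.0
print(pick_clock(light), pick_clock(heavy))
```

Because `pick_clock` takes no temperature input, identical game states with identical input give identical clocks; only the workload moves the clock.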

Edit: I misread your last sentence. I strongly doubt Cerny is intentionally misleading everyone about this by telling people publicly, in a GDC talk, that the clocks are deterministic when they actually aren’t.

All signs point to it being more expensive than the Series X. Huge misstep by Cerny.

I won’t be jumping in on day 1 or pre-ordering this myself; too many unknowns! No teardown yet, and they haven’t even given the product dimensions. I suspect they’re withholding information that would raise questions!

To be fair, I do think something happened at PlayStation for the system to end up like this. I don’t know if it was supposed to be less powerful and release last year, but there was a huge pivotal moment when Shawn Layden left and Jim Ryan took over. I think Cerny is just making the best of a shitty situation.

Ok, seeing as smarter people have discussed the silicon angle, allow me to approach the design aspect. First off, I’m a sheet metal worker. I’ve worked on at-scale projects, nowhere near the size of console sales, but the fundamentals are the same.

Going by word from some in the media, Sony changed their target during production, maybe after hearing what Microsoft was aiming for. The reason doesn’t matter.

From day 1, you have to decide what the budget is for your project. You allocate from there to components (silicon, software, hardware, peripherals, games) and start determining what is available to you for delivery inside your schedule.

For Sony, if the target did change, that change comes first and the flow-on effects follow from there. How do you hit the new target? How will it affect the work already done? What can you salvage? Does this require changes across the other components? If you have started first-batch prototype manufacturing, can you repurpose the components to reduce bumps in the plan?

When dealing with custom PC components in products I have worked on, heat is the biggest issue: ambient temperature ranges, vented heat, airflow requirements, fan placement for acoustic management. It all gets thrown out the window when the hardware changes.
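As a rough illustration of why a hardware change resets the airflow work, here is the standard sensible-heat relation, airflow = heat / (air density × specific heat × temperature rise), as a small calculator. The 200 W and 250 W system powers are hypothetical placeholders, not figures for either console.

```python
# Sensible-heat relation: airflow = heat / (air density * specific heat * temperature rise).
AIR_DENSITY_KG_M3 = 1.2               # dry air at roughly 20 °C, sea level
AIR_SPECIFIC_HEAT_J_PER_KG_K = 1005.0
CFM_PER_M3_PER_S = 2118.88            # 1 m^3/s expressed in cubic feet per minute


def required_airflow_cfm(heat_w: float, temp_rise_k: float) -> float:
    """Airflow needed to remove heat_w watts with a temp_rise_k intake-to-exhaust rise."""
    m3_per_s = heat_w / (AIR_DENSITY_KG_M3 * AIR_SPECIFIC_HEAT_J_PER_KG_K * temp_rise_k)
    return m3_per_s * CFM_PER_M3_PER_S


# Hypothetical system powers: going from 200 W to 250 W at the same allowed
# exhaust temperature rise needs 25% more airflow through the same vents.
for watts in (200, 250):
    print(f"{watts} W, 15 K rise: {required_airflow_cfm(watts, 15):.1f} CFM")
```

Airflow scales linearly with heat and inversely with the allowed temperature rise, so any jump in chip power means bigger fans, faster (louder) fans, or a hotter exhaust.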

So yeah, this new heatsink design has some PC roots and could help draw heat away from the silicon consistently. In all honesty, it’s quite a good method. The big questions will be around the fan’s air intake and venting.

Hi, just a tip: change the thermal paste. I did that this weekend on my One X and it’s whisper quiet now. It used to shut down every other day from heat; now it’s like brand new.

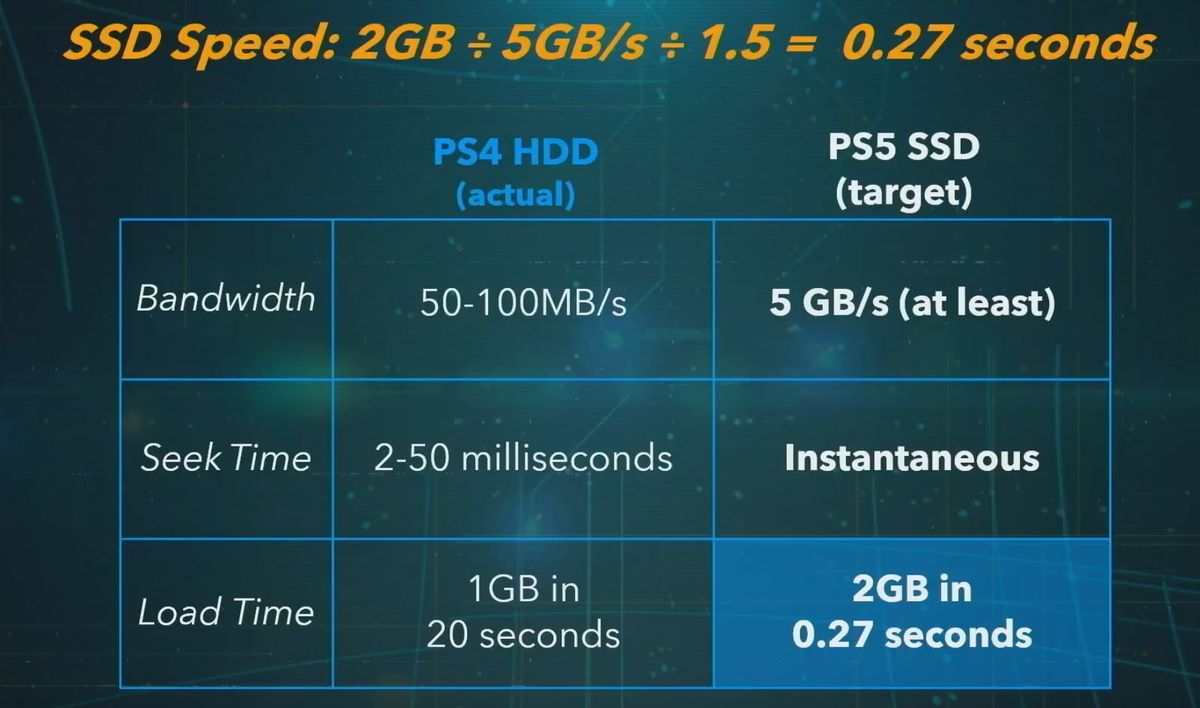

I had a question: could the PS5’s SSD also be working with “variable speed” the same way the GPU is? What I mean is: did they boost the SSD’s speed knowing it will throttle back if load or heat gets too high?

That’s a big question I have with the PS5 that I don’t have with the Xbox. With the Xbox we know the sustained speed, but with the PS5 we only know its max speed. It’s part of the reason Xbox went with a proprietary SSD: so they can manage the heat. The PS5 lets us use off-the-shelf SSDs (certified ones), but how are they dealing with the heat? The longer they keep the PS5 information hidden, the more I worry about it. Feels like a lot of smoke and mirrors.

It’s not hard for a developer to see where the bottleneck is in their debug tools. Not sure if it’s still the case, but the 360 dev tools let you follow a single pixel through the pipeline if you wanted. Plus, I’m sure a developer will know that if you come over a hill into a huge battle, it’ll likely tax the CPU more with all the draw calls and AI NPCs fighting.

It’ll add an extra factor for devs to consider, but it’s not as crazy as you’re making it out to be.

No, but the PS5 clocks are much higher. Besides, the XBO had more issues than just its lack of compute units.

It’s not too dissimilar. The PS5 has about a 20% higher GPU clock than the XSX, which is only about 6 percentage points more than the X1S’s clock lead over the PS4. Also, the memory bandwidth advantage the PS4 had over the X1S is roughly the same as the XSX’s over the PS5 (25%).
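A quick check of the ratios traded in this exchange, using the consoles’ published specs (the PS5 clock is its variable-frequency cap). With exact clocks the PS5’s lead comes out a bit above the rounded 20%, and the PS4-over-X1S bandwidth ratio depends heavily on whether the eSRAM is counted, which is the point the reply below raises.

```python
# Published specs: peak GPU clock (MHz) and main-memory bandwidth (GB/s).
# Xbox One S bandwidth is its DDR3 pool, excluding the 32 MB eSRAM;
# Series X bandwidth is for its faster 10 GB pool.
CLOCK_MHZ = {"PS4": 800, "Xbox One S": 914, "PS5": 2230, "Xbox Series X": 1825}
BANDWIDTH_GBPS = {"PS4": 176, "Xbox One S": 68, "PS5": 448, "Xbox Series X": 560}


def lead_pct(a: float, b: float) -> float:
    """How much larger a is than b, as a percentage."""
    return (a / b - 1) * 100


x1s_clock = lead_pct(CLOCK_MHZ["Xbox One S"], CLOCK_MHZ["PS4"])
ps5_clock = lead_pct(CLOCK_MHZ["PS5"], CLOCK_MHZ["Xbox Series X"])
xsx_bw = lead_pct(BANDWIDTH_GBPS["Xbox Series X"], BANDWIDTH_GBPS["PS5"])
ps4_bw = lead_pct(BANDWIDTH_GBPS["PS4"], BANDWIDTH_GBPS["Xbox One S"])

print(f"X1S clock lead over PS4: {x1s_clock:.1f}%")
print(f"PS5 clock lead over XSX: {ps5_clock:.1f}%")
print(f"Difference: {ps5_clock - x1s_clock:.1f} percentage points")
print(f"XSX bandwidth lead over PS5: {xsx_bw:.1f}%")
print(f"PS4 bandwidth lead over X1S (DDR3 only): {ps4_bw:.1f}%")
```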

Another interesting thing is that Cerny never talked about “higher tides lift all boats” this gen, probably because the Xbox One’s GPU was clocked higher. Cerny is a talented system architect, but he’s also quite the salesman.

The PS4’s memory bandwidth advantage is much bigger than that. If you’re counting the eSRAM, sure, but that small pool of RAM can only be used for a specific subset of operations. That’s not to say developers haven’t done an amazing job working around the limitations, but the XBO really is a Frankenstein of a machine.

I do agree with Cerny’s “higher tides lift all boats” comment, but the idea isn’t exclusive to him. The Xbox hardware team was saying the same thing for the XBO. There is some truth to it, and it’s one of the reasons the XBX and XSX are also clocked relatively high. There’s no denying that the Series X will have a power advantage; I just don’t see the gap being similar to XBO vs. PS4.

That’s why I think it runs a management system similar to the GPU’s. They have the SSD running at a faster max speed, but it will be load dependent. So on smaller tasks there will definitely be a speed benefit, but under heavy load it will throttle back down to more normal levels. It is still a clear advantage, though, if we assume normal levels are Xbox levels. It just won’t be doing any sustained heavy lifting at the faster rate.
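A purely hypothetical toy model of the speculation above; nothing in the thread confirms the PS5 SSD behaves this way. It assumes the drive reads at its advertised 5.5 GB/s raw rate until a heat budget runs out, then drops to a fallback rate for the rest of one continuous read. The fallback is set to the Series X’s advertised 2.4 GB/s raw rate to match the “normal levels are Xbox levels” assumption, and the 10-second burst window is made up.

```python
# Toy model of the *speculated* behaviour: full speed until a heat budget is
# used up, then a lower sustained rate for the rest of one continuous read.
BURST_GBPS = 5.5       # PS5's advertised raw SSD throughput
SUSTAINED_GBPS = 2.4   # hypothetical throttled rate, set to the Series X's advertised raw rate
BURST_SECONDS = 10.0   # hypothetical: how long the drive can burst before throttling


def read_seconds(total_gb: float) -> float:
    """Time to read total_gb in one uninterrupted load under the toy model."""
    burst_gb = BURST_GBPS * BURST_SECONDS
    if total_gb <= burst_gb:
        return total_gb / BURST_GBPS
    return BURST_SECONDS + (total_gb - burst_gb) / SUSTAINED_GBPS


for gb in (11, 115):
    secs = read_seconds(gb)
    print(f"{gb} GB: {secs:.1f} s, average {gb / secs:.2f} GB/s")
```

Under these assumptions a short load runs at the full burst rate, while a long sustained read averages out much closer to the fallback rate.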

Anyway, I’m sure Sony’s first-party games will take full advantage of all these little things and look great. That is their bread and butter anyway.

I agree, my original comment you replied to was just about higher clocks.

Based on the past few decades of PC and console GPUs, CU counts and clock speeds on their own haven’t mattered; what matters is the GPU’s overall performance. The CU and clock speed combination is just a means to an end.

I wish Cerny had gone into more detail about the advantages of higher clocks, because I do sense some embellishment and salesmanship.

We will see soon enough when the PS5 is compared to a lower-clocked PC GPU with the same architecture and TFLOPs. I’m very curious how the PS5 compares to a 5700 XT in games without ray tracing; my gut tells me it’s going to be very, very close.
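Peak FP32 compute is just CUs × 64 shaders × 2 FLOPs per clock × clock, so a wider, slower GPU and a narrower, faster one can land on the same TFLOPs figure. A sketch using published specs (PS5 at its 2.23 GHz cap, RX 5700 XT at its rated 1905 MHz boost). The 5700 XT is a first-generation RDNA part, so the match is by TFLOPs rather than exact architecture.

```python
# Peak FP32 throughput for AMD GPUs of this era:
# CUs * 64 shaders per CU * 2 FLOPs per clock (fused multiply-add) * clock.
SHADERS_PER_CU = 64
FLOPS_PER_SHADER_PER_CLOCK = 2


def fp32_tflops(cus: int, clock_ghz: float) -> float:
    """Theoretical peak FP32 TFLOPs for a given CU count and clock."""
    return cus * SHADERS_PER_CU * FLOPS_PER_SHADER_PER_CLOCK * clock_ghz / 1000


def clock_for_tflops(cus: int, target_tflops: float) -> float:
    """Clock (GHz) a GPU with `cus` CUs would need to reach target_tflops."""
    return target_tflops * 1000 / (cus * SHADERS_PER_CU * FLOPS_PER_SHADER_PER_CLOCK)


ps5 = fp32_tflops(36, 2.23)        # PS5 at its 2.23 GHz cap
rx5700xt = fp32_tflops(40, 1.905)  # RX 5700 XT at its rated boost clock
print(f"PS5: {ps5:.2f} TF, 5700 XT: {rx5700xt:.2f} TF")
print(f"40 CUs would need {clock_for_tflops(40, ps5):.3f} GHz to match the PS5")
```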

The advantage of higher clocks is that they speed up every part of the GPU (pixel rate, vertex, ALU, draw calls, etc.), whereas more CUs mostly speed up ALU and shader performance.
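One way to see how this could play out is a toy frame-time model, assuming a frame’s GPU work splits cleanly into an ALU-bound share (scaling with CUs × clock) and a share bound by fixed-function or front-end work (scaling with clock only). Real GPUs overlap these stages, and the model also assumes the PS5 holds its 2.23 GHz cap, so treat the output as illustrative only.

```python
# Toy frame-time model: one frame's GPU work is split into an ALU/shader-bound
# fraction (scales with CUs * clock) and a remainder that scales with clock only.


def relative_frame_time(alu_fraction: float,
                        clock_ghz: float, cus: int,
                        base_clock_ghz: float, base_cus: int) -> float:
    """Frame time relative to a baseline GPU (1.0 = same speed, below 1.0 = faster)."""
    clock_only_part = (1 - alu_fraction) * base_clock_ghz / clock_ghz
    alu_part = alu_fraction * (base_cus * base_clock_ghz) / (cus * clock_ghz)
    return clock_only_part + alu_part


# PS5 (36 CUs, up to 2.23 GHz) relative to Series X (52 CUs, 1.825 GHz).
for alu_fraction in (0.0, 0.25, 0.5, 0.75, 1.0):
    t = relative_frame_time(alu_fraction, 2.23, 36, 1.825, 52)
    print(f"ALU-bound share {alu_fraction:.0%}: PS5 frame time x{t:.2f} vs XSX")
```

In this model the PS5’s clock lead wins when most of the frame is clock-bound, and the Series X’s extra CUs win once roughly half or more of the frame is ALU-bound.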

I wonder how this will translate to game performance.

As long as there are no major bottlenecks, the lower clocks on the Series X won’t make a difference. 1.8 GHz isn’t slow at all for a GPU, especially in a console. I could be wrong, but I wouldn’t be surprised if the PS5 became ALU-bound before the Series X hit a bottleneck. While Cerny is correct with his higher tides lift all boats analogy, he’s making the most of a situation he was stuck in to support backward compatibility (BC).

Real-world performance:

| Metric | PS5 | Xbox Series X |
|---|---|---|
| Triangles per second | 8.92 billion | 7.3 billion |
| Triangles culled per second | 17.84 billion | 14.6 billion |
| Pixel fillrate | 142.72 billion pixels/sec | 116.8 billion pixels/sec |
| Texture fillrate | 321.12 billion texels/sec | 379.6 billion texels/sec |
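These figures are theoretical peaks (clock × fixed work per clock), not measured real-world performance, and they can be reproduced from the clocks alone. A sketch follows; the per-clock counts are assumptions chosen because they reproduce the posted numbers exactly (64 ROPs and 4 texture units per CU are the commonly reported figures for both GPUs). Only texture fill scales with CU count, which is why it is the one row where the Series X leads.

```python
# Per-clock unit counts inferred from the posted figures; they reproduce every
# number exactly. Texture units scale with CU count; the others do not.
PRIMS_PER_CLOCK = 4   # triangles processed per clock
CULLS_PER_CLOCK = 8   # triangles culled per clock
ROPS = 64             # pixels written per clock
TMUS_PER_CU = 4       # texture units per compute unit

GPUS = {"PS5": (2.23, 36), "Xbox Series X": (1.825, 52)}  # (peak clock GHz, CUs)


def peak_rates(clock_ghz: float, cus: int) -> dict:
    """Theoretical peak rates in billions per second."""
    return {
        "triangles": PRIMS_PER_CLOCK * clock_ghz,
        "triangles_culled": CULLS_PER_CLOCK * clock_ghz,
        "pixel_fill": ROPS * clock_ghz,
        "texture_fill": TMUS_PER_CU * cus * clock_ghz,
    }


for name, (clock_ghz, cus) in GPUS.items():
    rates = peak_rates(clock_ghz, cus)
    print(name, {k: round(v, 2) for k, v in rates.items()})
```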

Where did you get this info from?