I dunno about that. MS didn’t exactly try to hide anything regarding the specs and system features. They did make the same argument that raw specs don’t mean everything, and they definitely tried to deflect the conversation by arguing that they had traded ALU performance for extra cache with ESRAM, and that in the end this would give them a performance advantage. But they were really forthcoming about the feature level of their GPU, for example, and about many of the details.

There really could be some interesting things happening in the optimization process: you profile your code, which is too inefficient but runs at max frequency. You optimize it to use all GPU resources in the best manner. But now this code requires way more power, so it runs at a lower frequency. Oops.
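
To put rough numbers on that scenario, here is a minimal Python sketch of a power-capped clock. Everything in it is an assumption for illustration: the 200 W cap, the 250 W full-activity draw, the 2.0 GHz max clock, and the rule of thumb that power scales with activity times clock cubed (voltage rising roughly with frequency). None of these are real console figures.

```python
def sustained_clock_ghz(activity, max_clock_ghz=2.0, power_cap_w=200.0,
                        watts_at_full_activity=250.0):
    """Highest clock that keeps the modeled power under the cap.

    Assumed model (not a real console spec):
        power = watts_at_full_activity * activity * (clock / max_clock) ** 3
    `activity` is the fraction of the GPU that is busy, from 0 to 1.
    """
    if not 0.0 < activity <= 1.0:
        raise ValueError("activity must be in (0, 1]")
    power_at_max_clock = watts_at_full_activity * activity
    if power_at_max_clock <= power_cap_w:
        return max_clock_ghz  # under the cap, so the clock stays at max
    # Over the cap: scale the clock down until the cubic model fits the budget.
    scale = (power_cap_w / power_at_max_clock) ** (1.0 / 3.0)
    return max_clock_ghz * scale


def relative_throughput(activity):
    """Useful work per second in arbitrary units: busy fraction times clock."""
    return activity * sustained_clock_ghz(activity)


if __name__ == "__main__":
    for label, activity in (("unoptimized", 0.5), ("optimized", 1.0)):
        print(label, round(sustained_clock_ghz(activity), 3), "GHz,",
              round(relative_throughput(activity), 3), "work units")
```

Under these made-up numbers the optimized code does drop to a lower clock, but it still gets more work done per second, because the utilization gain outweighs the clock loss.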

One thing I was hoping to figure out from this talk was how capable the ML hardware is, and how capable the ray tracing hardware is.

We’re seeing DLSS 2.0 work wonders on Nvidia cards, but their Tensor cores seem far more capable than the solution of using the CUs for ML.

I get the same impression with ray tracing. Ray traced Minecraft looks incredible on Nvidia cards and looked unimpressive on the XSX. I imagine RT will be used sparingly for things like reflections and GI. But with engines like UE5 having their own comparable GI solution, maybe devs will stick to just reflections, audio, etc.

I am keeping in mind the fact that these consoles will sell for $499-599 and that the cards I’m comparing them to would sell for $600 alone. But still, it looks like AMD hasn’t made any breakthroughs with regard to ML & RT.

Is it possible for MS to overclock a bit or is it too late for that?

No, Phil Spencer already did a retail console unboxing at home. But it really doesn’t make sense anyway to mess with their engineering design at this point, when they have a very clear power advantage on paper and likely a bigger one in the real world, particularly when the PS5 doesn’t hit its upper variable clock under stress.

Makes sense. Thanks!

I’m sorry, but did you just say path traced Minecraft was ‘unimpressive’ on XSX?! 0.0

There are better uses of DLSS than upscaling the full frame imho. MS has at least 1 studio doing texture upres at runtime using ML. It is working “scarily well” according to MS. They plan to ship that game, whichever one it is, with low res textures and let ML upres as ya move through the scene.

That means much faster download times, much smaller downloads sitting on your SSD, vastly smaller I/O demands (!!!) and much better utilization of RAM…without any visual drawbacks at all in the end result. They can use VRS to help keep the visual impression on screen nice and sharp at faux-4K.
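
A rough sketch of the storage arithmetic behind that, in Python. The numbers are assumptions for illustration only: 1 byte per texel (as with BC7 block compression), a full mip chain adding about one third on top of the base level, and a hypothetical game that ships 2048x2048 textures instead of 4096x4096. It says nothing about the quality or runtime cost of the ML upscaler itself.

```python
def texture_bytes(width, height, bytes_per_texel=1.0, with_mips=True):
    """Approximate size of one texture in bytes.

    Assumptions: 1 byte per texel (BC7-style compression) and a full mip
    chain costing roughly 4/3 of the base level.
    """
    base = width * height * bytes_per_texel
    return base * 4.0 / 3.0 if with_mips else base


def savings_ratio(full_res, shipped_res):
    """How many times smaller the shipped texture is than the full-res one."""
    return texture_bytes(full_res, full_res) / texture_bytes(shipped_res, shipped_res)


if __name__ == "__main__":
    mib = 1024 * 1024
    print("4096x4096:", round(texture_bytes(4096, 4096) / mib, 2), "MiB")
    print("2048x2048:", round(texture_bytes(2048, 2048) / mib, 2), "MiB")
    print("ratio:", savings_ratio(4096, 2048))
```

Halving the resolution on each axis cuts the texel count to a quarter, so download size, SSD footprint and I/O for that texture all shrink by about the same factor in this simple model.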

The Minecraft demo was a direct port of the Nvidia code base to the SX. Nothing was changed or optimized compared to what they had on PC at the time.

I see no reason to do this.

- they are in front from a spec perspective

- they have balanced out the cooling so the console can be quiet with the current clock

- the lower the clock, the better the yields. Overclocking would just constrain the number of chips per wafer that can be used in the console, which makes it more expensive. Not something I want.

Great points!!

I understand that, but my concern is about the capacity, not the capability. For example, you could use ML to upscale the output, upscale textures to be razor sharp, and implement HDR, all for each frame, if you had enough power. But is it capable of running the emulator and running all of those at the same time? Is the total capability of 97 trillion INT4 ops per sec enough for all that when much of that is spent on the emulator? That’s my concern. Tensor cores on many Nvidia cards seem far more powerful, so my fear is that you won’t see the same level of performance boost.
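
For a sense of scale on the capacity question, here is a quick back-of-the-envelope sketch in Python. It divides the 97 trillion INT4 ops per second figure quoted above by the frame rate, after setting aside a share for everything else. The `reserved_fraction` values are purely hypothetical, and peak ops are a theoretical ceiling that real workloads won’t reach.

```python
PEAK_INT4_OPS_PER_SEC = 97e12  # peak figure quoted in the post above


def ops_per_frame(fps, reserved_fraction=0.0,
                  peak_ops_per_sec=PEAK_INT4_OPS_PER_SEC):
    """Peak INT4 ops available to ML work within one frame.

    `reserved_fraction` is a hypothetical share of the GPU already spent on
    other work (emulator, rendering); it is not a measured number.
    """
    if fps <= 0:
        raise ValueError("fps must be positive")
    if not 0.0 <= reserved_fraction < 1.0:
        raise ValueError("reserved_fraction must be in [0, 1)")
    return peak_ops_per_sec * (1.0 - reserved_fraction) / fps


if __name__ == "__main__":
    for fps in (60, 120):
        for reserved in (0.0, 0.5):
            budget = ops_per_frame(fps, reserved)
            print(f"{fps} fps, {reserved:.0%} reserved: {budget:.2e} ops per frame")
```

Whether that per-frame budget is enough depends on how many ops a given upscaling network needs per pixel, which this thread doesn’t tell us.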

But I could be wrong, and that’s why I asked. I suspect there’s more to it than raw operations. Maybe AMD and MS have some key optimizations.

Note that I didn’t get into MS’s own upscaling algorithms. They could be better than DLSS 2.0. These algorithms could be made more efficient over time. I’m just speaking to the hardware capacity itself. This is built on my impression that AMD is behind Nvidia when it comes to this tech.

So to be clear, I’m not saying it’ll be useless on the XSX. Things like AI upscaling are why I think the performance delta between the XSX and PS5 will be greater than people think. Things like AI upscaling will ensure rock solid 60fps and 120fps games. My question is whether there is enough power to do it as well as, say, a 2080 Ti. Price-wise you’d have to say no, but maybe some features and optimizations make RDNA2 very comparable in that regard.

And my Minecraft RT demo impressions have to do with performance and how it looked overall.

Some guy from MS spoke about reconstructing textures as they are loaded in real time. MS also has research on that as a means of compression.

It may be that there are costs associated with them, but it’s another tool devs have at their disposal when designing a game.

I dunno how XSX ML compares to Nvidia so can’t answer your question meaningfully. I do think they did cover these metrics in the Hot Chips presentation, just not directly like for like comparisons to Nvidia.

I dunno what more anyone can ask for from the Minecraft demo. It’s path tracing. On a console. That alone is mindblowing.

I’m excited about the audio in this box since it will be retiring my 1S in the theater room. I’m looking forward to the audio improvement, and I thought the 1S was already pretty good at Dolby Atmos and DTS.

I think Gears 4 and 5 are good indicators of what to expect. They already do the whole shebang: dynamic audio sources that are propagated through the scene geometry.

However, to achieve that they go through a pre-baking process and the scene must be somewhat static. With the audio chip being so much more capable, they are now able to skip the pre-baking and propagate audio in real time, so hopefully it will be used in more games.

I think Super Resolution upscaling has the best chance to work with the emulator and not a normal next gen game. 360 BC already runs on Xbox One S, so Series X has over 20 Teraflops FP16 left for upscaling algorithms. I’m skeptical about DLSS like stuff in normal games but this here could work out. If publishers give their ok.
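
A quick sanity check of that headroom estimate, sketched in Python. The CU counts and clocks come from the public spec sheets (52 CUs at 1.825 GHz for Series X, 12 CUs at 914 MHz for One S), not from this thread. The rest is assumption: that an emulated 360 game costs about the One S’s entire FP32 budget, and that FP16 runs at twice the FP32 rate on Series X.

```python
def peak_tflops(compute_units, clock_ghz, lanes_per_cu=64, ops_per_lane_per_clock=2):
    """Theoretical FP32 TFLOPS: CUs x lanes x 2 ops (fused multiply-add) x clock."""
    return compute_units * lanes_per_cu * ops_per_lane_per_clock * clock_ghz / 1000.0


def fp16_headroom_tflops():
    """FP16 TFLOPS left on Series X after reserving a One S worth of FP32.

    Assumptions (not confirmed anywhere in this thread): the emulated game
    needs the whole One S GPU budget, and FP16 is double rate.
    """
    series_x_fp32 = peak_tflops(52, 1.825)  # public Series X GPU spec
    one_s_fp32 = peak_tflops(12, 0.914)     # public One S GPU spec
    return 2.0 * (series_x_fp32 - one_s_fp32)


if __name__ == "__main__":
    print("Series X FP32:", round(peak_tflops(52, 1.825), 2), "TFLOPS")
    print("One S FP32:", round(peak_tflops(12, 0.914), 2), "TFLOPS")
    print("FP16 headroom:", round(fp16_headroom_tflops(), 1), "TFLOPS")
```

On these assumptions the "over 20 Teraflops FP16" figure holds up as a theoretical peak; real emulation overhead could eat into it.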

For those of you who are interested…

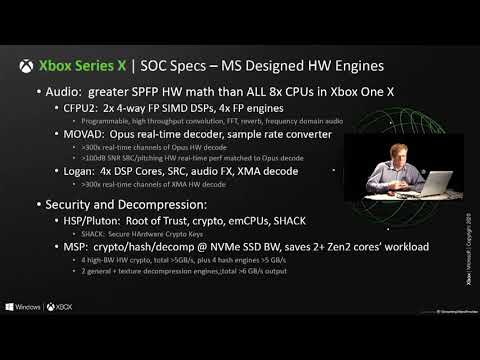

This is the full Xbox Series X System Architecture Conference at Hot Chips 2020. Speakers are Jeff Andrews & Mark Grossman, Microsoft.


The PS4 Pro got something after launch called boost mode.

So something like that is possible now. But I personally don’t think it will happen.

Temp readings for the box show it’s operating well below the temperatures that the system is actually able to cool. So they definitely have the thermal room to overclock if they wanted.

But I think that’s part of how they design consoles now, since the X: really overshoot the cooling system so that it’s not an issue when fine-tuning the performance.

And the X was the same: they didn’t overclock it despite it running at significantly lower temperatures than the Pro.

Interesting that they note they added silicon logic (transistors) specifically for machine learning. Before this, most thought that the Series X just used its CUs for machine learning, but nope, they have hardware specifically dedicated to that task. It’s not Tensor cores, but it’s something.